This is the 4th and last part of a single post (1, 2, 3)

For all the things in our life, we constructed boundaries that sometimes are completely artificial with not much actual sense in the reality of things, presenting more and more false dilemmas

This series of posts is of course about user interfaces, and this last post is about two common beliefs about applications and UIs that I think are false distinctions (and particularly care of)

Workspace? Application?

The boundary between the workspace and the applications is somewhat artificial, as is the boundary between one application or the other. Is there a common function that most applications share?

If so whether it appears in the application window, in the workspace or as part of another application is just a matter of what are usability findings for that action in particular (see Share Like Connect in Plasma Active).

How much two applications have to be considered separated if they share most of their components? or are just two tools of the same procedure? the knife and the spoon of the dish application?

In the end how much corresponds the separation I see in windows? is what i see as different tasks in the taskbar actually a different task? We should think more about different semantic task rather than different process/window that is an implementation detail.

Everything is just part of the same tool, the same system.

But is system == the machine? No!

Desktop/phone/tablet/TV…

Even distinction between devices and type of devices is artificial: my work spreads trough multiple machines, some more capable than others.

I won’t be able to do the most complex data creation operations on a phone, but I can view that work and create something, so on a mobile device I don’t want a completely alien set of tools, and i want to be able to access the very same resources.

- I want everything as much synchronized as possible

- I don’t want to set up the very same mail accounts on every device

- If I have a movie on my desktop I want it to be available on my tablet

- And if I press “play” on my tablet I want to see it on my TV

- And i want zero effort to do that, not having to play around with settings and shared folders for hours.

I also want as much as possible of the very same visual language shared between all my devices. There are differences in input methods and screen sizes (in few years differences between pixel densities will be nullified), but most of the aestetics, a part of the behavior and most of the applications should be shared between all the devices, even if with more faces.

I don’t want to hear anymore KDE mobile effort versus the desktop: they are the same system. They are an unity, a spectrum that scales smoothly without really distinct interruptions.

Devices used to be very different from one to another, but in the next years differences will be less and less, in 1-2 years probably 100% of the sold computers, desktop or not will have a touch screen, we will see as big X86 tablets as very small ARM laptops. the input method and the mobile-ness won’t be a boolean anymore, they will be a real, from the big desktop(that is definitely not going away) to the small phone there will be a million of in-between thinghies.

And this is a false dichotomy dying right now, a distinction that existed for technical reasons (small mobile processors not having enough power) is fading away, we better adapt or die as well.

We are seeing different approaches in software to be ready to this paradigm shift in hardware; both Windows 8 and OSX are trying to “mix” desktop and mobile paradigms. Here the problem (especially visible in Windows 8) is that the transition from different devices is too abrupt, you end up with an ui designed for a different kind of input method from what you are using (thik about Metro start screen on a 24″ monitor).

So:

- One size does not fit all.

- There aren’t anymore well defined boundaries for different devices.

- The UI should be like lego, every little piece should be interchangeable to do a million different combinations: small tablet, large one, laptop with touchscreen, small laptop with a mouse, tablet dockable to a mouse/keyboard station, whatever we don’t imagine yet…

To do a synopsis of this last post of the series, a phone and a desktop are the same system.

There are the Bob’s and the John’s systems, not the tablet system and the laptop system, the only difference between two systems is belonging to different people, being tailored around different lives.

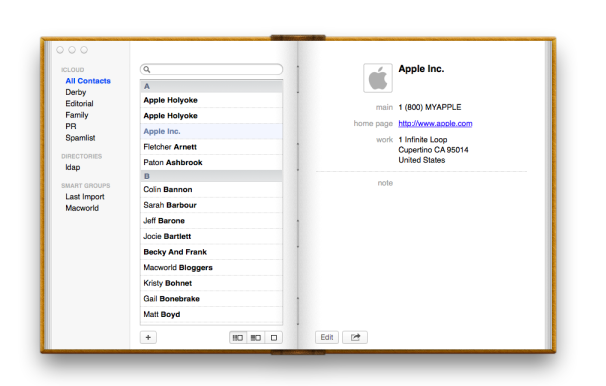

To conclude, by the way here is the complete version of the mockup that srarted this long brain dump: