Plasma has been designed from the get go (2006 or so.. it seems at least 2 eternities ago 🙂 ) to not make any assumptions on the type of device and to do a clear separation between the core technology/runtime and the various GUI plugins that end up implementing a full desktop experience.

In an architecture decision informed by previous prototypes we did in KDE4 times for mobile devices UIs, in Plasma 5 we split it further and introduced the concept of a “shell package” which lets further customization between devices than what Plasma in KDE4 times allowed.

Because of that we could do the Plasma Mobile shell without changes to the architecture that runs both the Desktop shell and the mobile version, despite being a completely different UI.

While working on different shells such as Plasma mobile and the Mycroft voice assistant (more on that later) we noticed that the best possible help for building a shell for a new type of device from the ground up is a very minimal shell that doesn’t make many assumptions about the final gui, but just provides few building blocks that will be in the end customized by the developer.

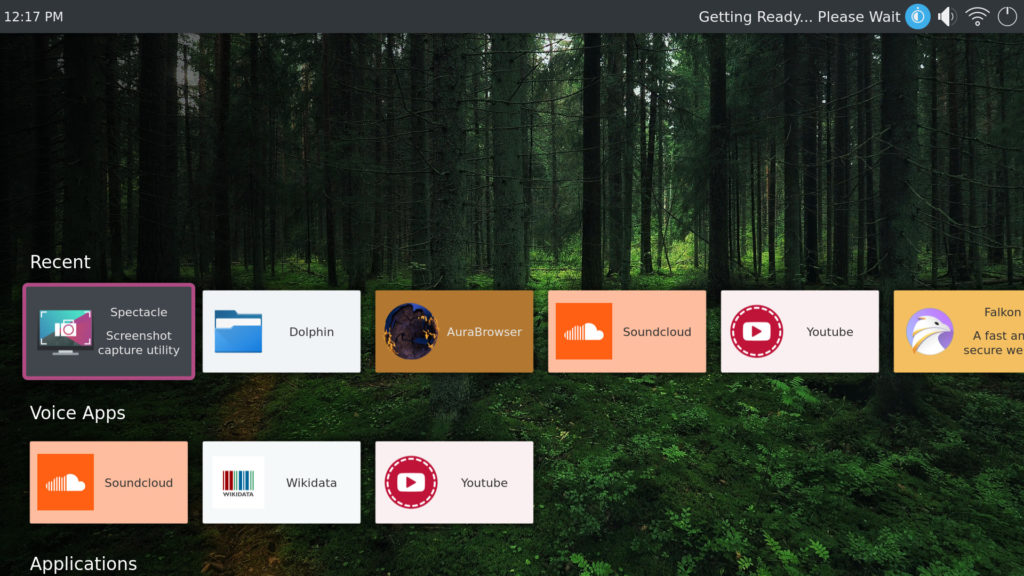

Enter Plasma Nano. Plasma Nano is a minimal shell not intended for end users which the developer will extend to match the final desired user experience (think about subclassing in object oriented languages)

The normal television set is becoming more and more from just a “dumb monitor” to a full featured computer system. But for several factors its UI must be completely different from both a desktop system, and from a mobile system.

Those so called 10 foot user interfaces have some particular constraints. The screen will always be seen from pretty far (altough you can’t be sure exactly the distance the users will look at it) so every graphical element must be very clear and big enough to be seen from a distance (indeed ~10 feet / 3 meters or so) so very big text, clear and spaced (too high information density should be avoided)

Every control should be accessible with the least number possible of buttons. It should be easily controllable with just the remote control, and in particular with just arrow keys, and “ok” button and one to go back. Often tv remote controls have a plethora of buttons, tough which makes the user experience only more confusing.

Another important way of interaction recently in smart tvs to go around the lack of complex input methods is voice control. It is ideal there as the commands to the TV would be very basic like “watch this series on Netflix” and things like that. This of course is giving birth to a slew of very legitimate privacy concerns, but this technology is too good and convenient to throw it away because the current implementations are done by less than honest corporate giants.

Mycroft is an open source project which is aiming to build a fully opensource voice-based personal assistant with a growing number of skills.

Understanding voice is in two independent parts: speech to text (going from the sound file of the recorded voice to a normal string of letters) and actual semantic understanding of the sentence to then translate it to a concrete action (fetching weather data, a Youtube video and so on). The latter part is the bulk of the work for a personal assistant and is what Mycroft implements. it can then use an external service for speech to text.

It can use a variety of services, from Google (speech to text, not full assistant) to a different number of free and proprietary services. In the end, they want to use the Mozilla Deepspeech project, which would make the stack 100% free. You can already configure it to use deep speech or other engines, even if not fully ready yet (want to help out? Mozilla is looking for voice samples to make their product better and ready for mass consumption!)

A voice assistant looks better with a GUI part as well, as the echo show and google assistant demonstrate.

Some of us worked together with the Mycroft people to produce an extensive set of QML bindings for the Mycroft system, (that will provide as wellthe user interface for future Mycroft based smart speakers). They’re a third-party QML module that can be freely used by any Qt-based applciation for voice integration.

Plasma Bigscreen can optionally use those QML bindings to provide parts of the QML UI of the user experience (so yes you can ask via voice to your TV if it’s going to rain tomorrow or to watch the music video of so-and-so on YouTube)

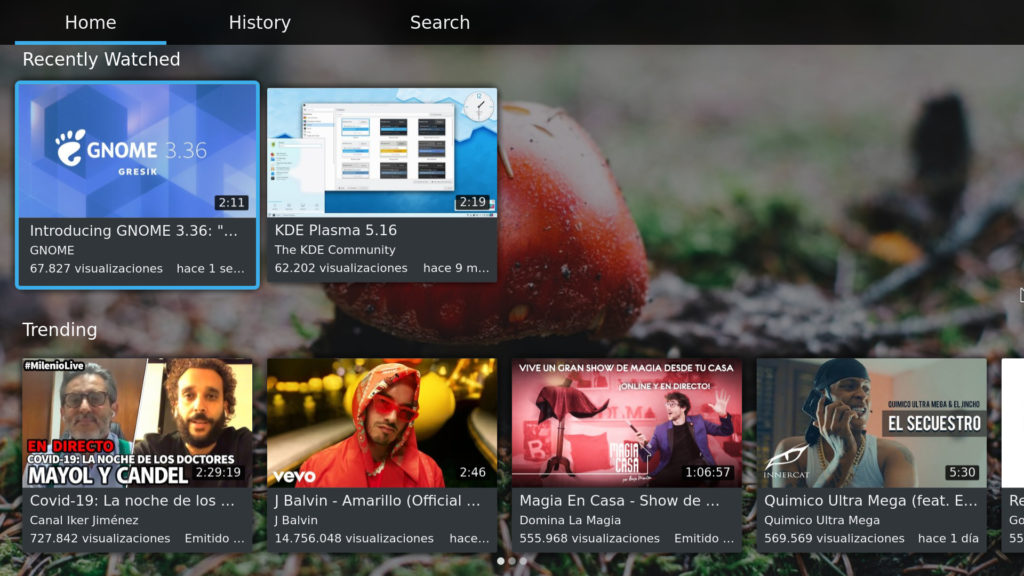

Those QML bindings can provide from Mycroft a variety of GUI features, from simple notifications like a clock if you ask what time it is, to a full featured interactive app, like we did for the Youtube skill, which is a Youtube browser app which can be used both via the voice only, the remote control only, or a combination of both and provides a footprint for future rich voice-interactive user interfaces.